AI for better or for worse, or AI at all?

When I was a little girl, I was taught a song about a ball of white string, in which the white string could fix everything: tie a bow on a gift, fly a kite, mend things. The second verse of the song was about all the things that string cannot fix: broken hearts, damaged friendships; the list goes on. In all of the research I have been doing about Artificial Intelligence (AI), its governance and what it can do, this song has frequently come to mind. Many authors and researchers are doing the equivalent of repeatedly singing the first verse of the song, about all the things that AI can do, without contemplating where AI cannot effectively or, more importantly, should not be used. Probably now nearing the height of the Gartner hype cycle, AI is often misleadingly touted as being able to fix practically everything. Although it is true that AI will expedite many business processes and engender new ways of acquiring and creating wealth through more sophisticated use of data, for the everyday citizen those benefits are not always apparent.

The reality of what AI can do is very different from what is conveyed, particularly by industry. In some instances, in fact, AI is breaking things in a way and at a speed that is unprecedented. It is impossible for businesses and governments to simultaneously maximise public benefits, service levels, market competition and profitability. Profitability is almost inevitably being prioritised in a neoliberal context, at the expense of democracy, individual freedoms and the voices of civil society and citizens. Many are voicing these concerns, but they are yet to be actively addressed at all stages of contemplation of the use of AI.

AI includes a series of component parts, both software and hardware. AI may include the following: data-based or model-based algorithms; the data, both structured and unstructured; machine learning, both supervised and unsupervised; the sensors that provide input and the actuators that effect output. AI is complicated and encompasses many things. For ease of reference in this chapter, the term ‘AI’ includes all of these components, each of which may require different considerations and limitations. The individual consideration of each component is beyond the scope of this brief chapter, but this complexity is important to hold in mind when considering applications of AI.

This chapter will contemplate the current popular dichotomy between techno-utopians (those who think that technology — including AI — will save the world) and techno-dystopians (who think technology will destroy it). I conclude that there needs to be a greater space in the discourse for questions, challenge and dissent regarding the use of technology without being dismissed as a techno-dystopian. In fact, these voices are required to ensure safe and beneficial uses of AI, particularly as this technology is increasingly embedded in physical systems and affects not only our virtual but also our physical worlds.

The popular and overly simplistic dichotomy often posed is between techno-utopians and techno-dystopians. The reality is far more complex, and creating a notion of ‘friends’ or ‘enemies’ of technology does not foster helpful dialogue about the risks and dangers of developing and using certain applications of AI. Differing interests and profoundly powerful market forces are shaping the conversation about AI and its capabilities. What Zuboff terms ‘surveillance capitalism’ is far too profitable and unregulated to allow for genuine consideration of human rights, civil liberties or public benefit.1

Every technology has a history and a context.2 A prominent example from Winner’s book, The Whale and the Reactor, involves traffic overpasses in and around New York, designed by Robert Moses. Many of the overpasses were built low, which prevented access by public buses. This, in turn, excluded low-income people, disproportionately racial minorities, who depended entirely on public transportation. Winner argues that politics is built into everything we make, and that moral questions asked throughout history (including by Plato and Hannah Arendt) are relevant to technology: our experience of being free or unfree, the social arrangements that foster either equality or inequality, the kinds of institutions that hold and use power and authority. The capabilities of AI, and the way that it is being used by corporations and by governments, continue to raise these questions today. Current systems using facial recognition or policing tools that reinforce prejudice are examples of technology that builds on politics. The difference is that, in non-physical systems, politics are not as easy to identify as in a tangible object like an overpass, although they may be similarly challenging to rectify after they have been built, and equally create outcomes that generate power and control over certain constituents.

The 1956 Dartmouth conference, which ran over eight weeks, is often cited as the birth of AI as an academic discipline. It was also a product of its time.

The conference participants, mainly white, wealthy and educated men, met to:

proceed on the basis of the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it. An attempt will be made to find how to make machines use language, form abstractions and concepts, solve kinds of problems now reserved for humans, and improve themselves. We think that a significant advance can be made in one or more of these problems if a carefully selected group of scientists work on it together for a summer.3

Following this conference, developments in AI moved ahead in fits and starts. The quiet periods are now retrospectively referred to as “AI winters”.4 More recently, successes in game playing and facial recognition, among other advances, have attracted considerable attention, both positive and negative. The most rapid advances, however, have been in the growth of companies such as Google and Facebook, which configure and use data derived from AI for the benefit of tracking their users and providing them with particular features.

Many companies use AI to deliberately pursue anti-regulatory approaches to existing legal structures governing business.5 These structures exist to ensure that societies receive tax revenue to improve the physical world for their citizens, to ensure that safety and privacy are upheld, and to express protections and norms (ideally) created by democratically elected representatives. Some companies using ‘disruptive’ AI technologies are deliberately avoiding these regulations.

No ethical limitations will ever prevent these companies from following a business model designed to deliberately pursue anti-regulatory approaches. In German, the word for disruptive is Vorsprung,6 which literally translates to ‘jump over’. In effect, these companies are jumping over existing regulatory frameworks to pursue market dominance. In some jurisdictions, legal action is being taken to try to mitigate these approaches. In Australia, in May 2019, more than 6000 taxi drivers filed a class action lawsuit for lost income against Uber for its deliberate attempt to ‘jump over’ existing laws regulating taxi and limousine licensing. ‘It is not acceptable for a business to place itself above the law and operate illegally to the disadvantage of others,’ said Andrew Watson, a lawyer with the claimants’ firm Maurice Blackburn.7

Facebook, which collects, stores and uses people’s private data, was recently fined US$5 billion for breaches related to the Cambridge Analytica scandal.8 At the time of writing, Facebook’s market capitalisation was approximately US$584 billion.9 After the fine was announced, Facebook’s share price rose by enough to have covered the fine, most probably due to investor relief that no further regulatory responses were imminent. The fine, which represents about three months of Facebook’s revenue, also shows that regulators are toothless, unserious, or even worse, both.10

It has already been suggested that the use of AI in particular circumstances is not accidental, but rather often a deliberate method to avoid introspection and traditional governance through inexplicable decision-making processes.

‘governance-by-design’ — the purposeful effort to use technology to embed values — is becoming a central mode of policymaking, and … our existing regulatory system is fundamentally ill-equipped to prevent that phenomenon from subverting public governance.11

Mulligan and Bamberger raise four main points. First, governance-by-design overreaches by using overbroad technological fixes that lack the flexibility to balance equities and adapt to changing circumstances. Errors and unintended consequences result. Second, governance-by-design often privileges one or a few values while excluding other important ones, particularly broad human rights. Third, regulators lack the proper tools for governance-by-design. Administrative agencies, legislatures and courts often lack technical expertise and have traditional structures and accountability mechanisms that poorly fit the job of regulating technology. Fourth, governance-by-design decisions that broadly affect the public are often made in private venues or in processes that make technological choices appear inevitable and apolitical.

Each of these points remains valid. Use of AI by governments and corporates alike often masks the underlying political agenda that use of the technology enables. In the case of what has been coined ‘Robodebt’ in Australia, the Federal Government’s welfare department, Centrelink, used an algorithm to average a person’s annual income gathered from tax office data over 26 fortnights, instead of individual fortnightly periods, to calculate whether they were overpaid welfare benefits. Recipients identified as having been overpaid were automatically sent letters demanding explanation, followed by the swift issuance of debt notices to recover the amount.
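To see why averaging can manufacture a debt, consider a minimal sketch in Python. It is an illustration only, not a reconstruction of Centrelink's system: the payment amount, income-free area and taper rate below are hypothetical figures I have invented for the example, as is the person's work history. The sketch compares the overpayment calculated from actual fortnightly income with the overpayment calculated after spreading annual income evenly across 26 fortnights.

```python
FORTNIGHTS_PER_YEAR = 26

# Hypothetical benefit rules, invented for illustration only;
# they are NOT Centrelink's actual rates or thresholds.
FULL_BENEFIT = 500.0      # fortnightly payment with no other income
INCOME_FREE_AREA = 100.0  # fortnightly income allowed before payment reduces
TAPER_RATE = 0.5          # payment lost per dollar earned above the free area


def entitlement(fortnightly_income):
    """Benefit payable for one fortnight under the hypothetical rules."""
    excess = max(0.0, fortnightly_income - INCOME_FREE_AREA)
    return max(0.0, FULL_BENEFIT - TAPER_RATE * excess)


def total_overpayment(payments, assessed_incomes):
    """Sum, over all fortnights, of any payment above the assessed entitlement."""
    return sum(
        max(0.0, paid - entitlement(income))
        for paid, income in zip(payments, assessed_incomes)
    )


def averaged_incomes(annual_income):
    """Spread annual income evenly over 26 fortnights (the averaging step)."""
    return [annual_income / FORTNIGHTS_PER_YEAR] * FORTNIGHTS_PER_YEAR


# A hypothetical person: no income for 13 fortnights (on benefits),
# then 13 fortnights of work at $2,000 per fortnight (off benefits).
actual_incomes = [0.0] * 13 + [2000.0] * 13
payments = [entitlement(income) for income in actual_incomes]  # paid correctly

debt_from_actual = total_overpayment(payments, actual_incomes)
debt_from_average = total_overpayment(
    payments, averaged_incomes(sum(actual_incomes))
)

print(f"Debt using actual fortnightly income: ${debt_from_actual:,.2f}")
print(f"Debt using averaged annual income:    ${debt_from_average:,.2f}")
```

Under these assumed rules the person was paid exactly what they were entitled to in every fortnight, yet averaging attributes $1,000 of income to each of the fortnights in which they earned nothing, and so raises a debt of $5,850 that does not exist.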

This method of calculation resulted in many incorrect debts being raised. Welfare recipients comprise one of the most vulnerable groups in Australia, and even raising these debts without consultation or human interaction arguably caused profound detrimental effects.12 Senator Rachel Siewert, who chaired the Senate Estimates hearings into Robodebt, noted that, ‘[t]here were nine hearings across Australia, and what will always stick with me, is that at every single hearing, we heard from or about people having suicidal thoughts or a severe deterioration in mental health upon receiving a letter.’13

The practical onus at law is on any creditor (in this case, Centrelink) to prove a debt, not on the debtor to disprove it. Automating debt-seeking letters challenges a fundamental legal rule derived from long-standing principles of procedural fairness.14 This is the type of automation that, even if rectified, causes irreversible damage, and is in and of itself a form of oppression.

The use of social media to influence elections in the United States and the Brexit referendum has been extensively covered, but more recently, similar tools were used in the 2019 Australian Federal election, when advertisements started to appear in social media feeds regarding a proposed (and utterly fictitious) death tax by the opposition Australian Labor Party.15 Although the impact of this input on the Australian election is not completely clear, it is known that nearly half of Gen Z obtain their information from social media alone, and that 69% of Australians are not interested in politics.16 These kinds of audiences are prime targets for deliberately placed, misleading social media advertisements, based on algorithmically generated categorisations.

Even if we could agree on clear parameters about how we build, design, and deploy AI, all of the normative questions that humans have posed for millennia would remain.17 No simple instruction set, ethical framework, or design parameters will provide the answers to complex existential and philosophical questions posed since the dawn of civilisation. Indeed, the very use of AI, as we have seen, can be a way of governing and making decisions.

Joseph Weizenbaum was the creator of ELIZA, the first chatbot, named after the character in Pygmalion. It was designed to emulate a therapist through the relatively crude technique of consistently asking the person interacting to expand upon what they were talking about and asking how it made them feel. A German-American computer scientist and professor at MIT, Weizenbaum was disturbed by how seriously his secretary took the chatbot, even when she knew it was not a real person.
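How crude this technique is becomes clearer in a minimal sketch. The Python below is not Weizenbaum's program or his original script; the patterns, reply templates and pronoun table are hypothetical ones I have chosen for illustration. It matches a phrase in what the person says, swaps a few pronouns, and hands the phrase back as a question.

```python
import re

# Hypothetical rules for illustration only; not Weizenbaum's original script.
# Each rule pairs a pattern with a reply template that reuses the matched text.
RULES = [
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE),
     "How does being {0} make you feel?"),
    (re.compile(r"\bmy (.+)", re.IGNORECASE), "Tell me more about your {0}."),
]

# Fallback when no rule matches: simply ask the person to keep talking.
DEFAULT_REPLY = "Please go on. How does that make you feel?"

# Swap first-person words for second-person ones in the echoed fragment.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are"}


def reflect(fragment):
    """Replace first-person words so the fragment can be echoed back."""
    return " ".join(REFLECTIONS.get(word.lower(), word)
                    for word in fragment.split())


def respond(utterance):
    """Return a reply built from the first matching rule, else the fallback."""
    text = utterance.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(reflect(match.group(1)))
    return DEFAULT_REPLY


print(respond("I feel ignored by my family."))
print(respond("It rained today."))
```

Nothing in the sketch understands anything; it only rearranges the speaker's own words. That a program built on this principle could be taken so seriously is what troubled Weizenbaum.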

Weizenbaum’s observations led him to argue that AI should not be used to replace people in positions that require respect and care.18 Weizenbaum was very vocal in his concerns about the use of AI to replace human decision making. In an interview with MIT’s The Tech, Weizenbaum elaborated, expanding beyond the realm of mere artificial intelligence, explaining that his fears for society and its future were largely because of the computer itself. His belief was that the computer, at its most basic level, is a fundamentally conservative force: it can only take in predefined datasets in the interest of preserving a status quo.

More recently, other writers have expressed similar concerns.19 Promises being made by technology companies are increasingly being questioned in light of repeated violations of human rights, privacy and ethical codes. The United Nations has found that Facebook played a role in the generation of hate speech that resulted in genocide in Myanmar.20 The Cambridge Analytica scandal has, by Facebook’s own estimation, been associated with the violation of the privacy of approximately 87 million people.21 Palantir, an infamous data analytics firm, is partnering with the United Nations World Food Programme to collect and collate data on some of the world’s most vulnerable populations.22 Many questions are being raised about the role and responsibility of those managing the technology in such situations, not just in times of conflict,23 but also in times of peacekeeping,24 including a United Nations response to identify, confront and combat hate speech and violence.25

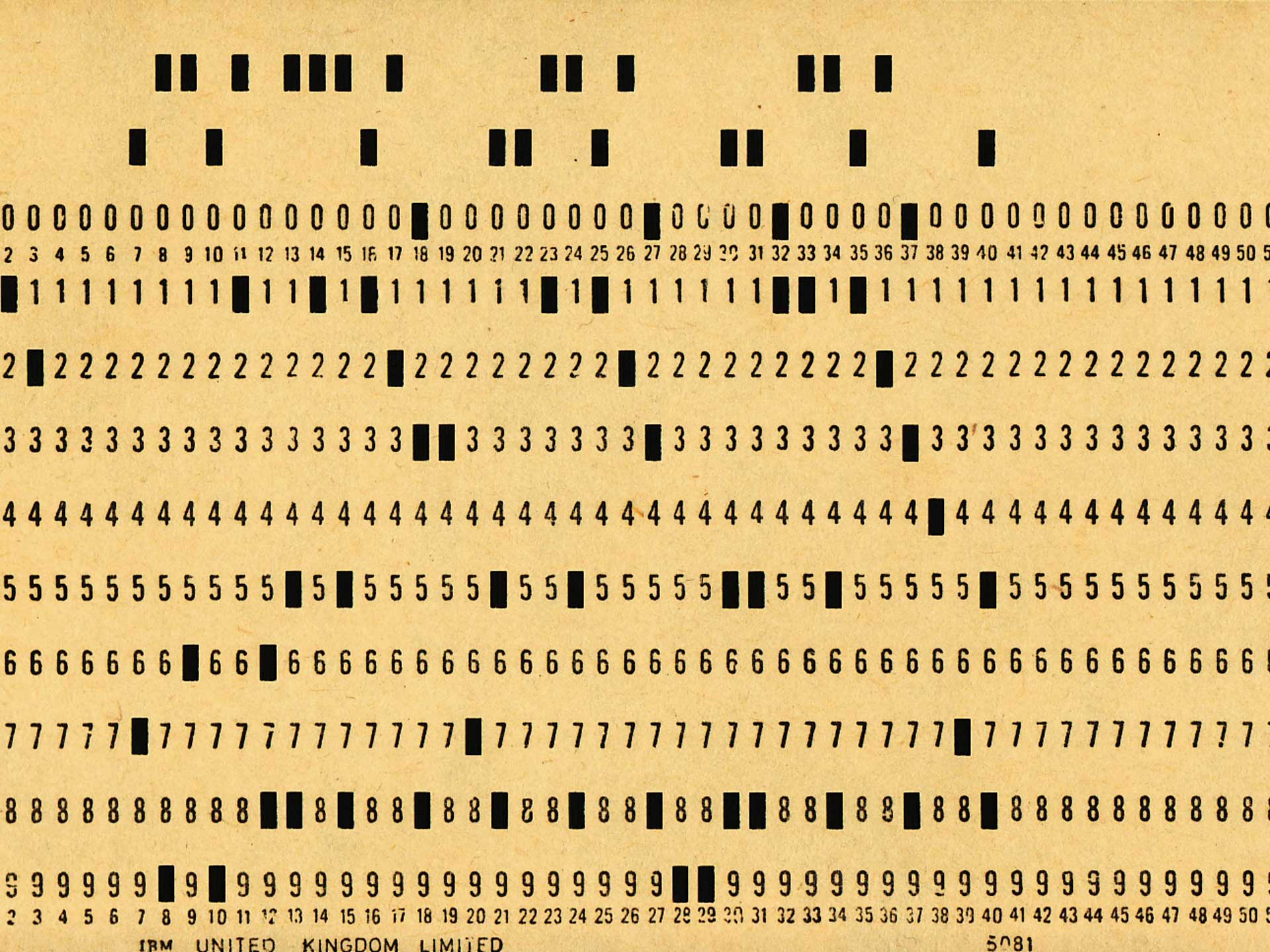

Weizenbaum was also a product of his time, having escaped Nazi Germany with his family in 1936. His knowledge, and personal experience, of the use of data to make the Holocaust more efficient and effective inevitably shaped his perception and thinking about the wider use of data and computers. The first computers of IBM were used to create punch cards outlining certain characteristics of German citizens.26 IBM did not enable the Holocaust, but without IBM’s systematic punch card system, the trains would not have run on time, and the Nazis would not have been anywhere near as clinically ‘efficient’ at identifying Jews. For this reason, Germans still resist any national census, and they also resist a cashless society such as that which Sweden has wholeheartedly adopted.

Hubert Dreyfus expressed similar concerns. His book, What Computers Can’t Do, was ridiculed on its release in 1972, another peak of hype about computing.27 Dreyfus’ main contention was that computers cannot ‘know’ in the sense that humans know, using intuition, contrary to what IT industry marketing would have you believe. Dreyfus, as a Professor of Philosophy at Berkeley, was particularly bothered that AI researchers seemed to believe they were on the verge of solving many long-standing philosophical problems within a few years, using computers.

We are all products of our time and each of us has a story. My great-uncle was kept in Sachsenhausen in solitary confinement for being a political objector to participating in World War II. I was partially raised in Germany during the tumultuous late 1980s, and the tensions and histories are etched into some of my earliest memories. I returned to Germany in the late 1990s to watch the processing of history on the front page of every newspaper of the day. Watching today how data is being collected, shared, traded and married with other data using AI, knowing its potential use and misuse, it is difficult for me not to agree with Weizenbaum that there are simply some tasks for which computers are not fit. Beyond being unfit, there are tools being created and enabled by AI that are shaping our elections, our categorisation by governments, our information streams, and, albeit least importantly, our purchasing habits. My own history shapes my concern about how and why these systems and tools are being developed and used, a history that an increasing proportion of the world does not remember or prefers to think of as impossible in its own time and context.

Although AI as we understand it was conceived and developed as early as 1956, we are only just coming to understand the implications of rapid computation of data, enabled by AI, and the risks and challenges it poses to society and to democracy. Once data is available, AI can be used in many different ways to affect our behaviours and our lives. Although these conversations are starting to increase now, Weizenbaum and Dreyfus considered these issues nearly five decades ago. Their warnings and writings remain prescient.